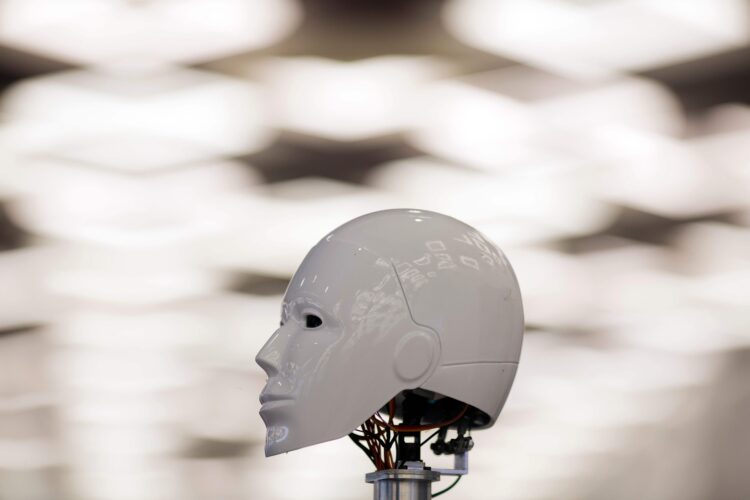

As advancements in artificial intelligence continue to accelerate, Silicon Valley insiders have reportedly been openly assessing the possibility of an AI-driven apocalypse, even developing a metric to define the odds of humanity’s destruction.

According to a New York Times report, discussions of the “probability of doom”—represented as p(Doom) in mathematical terms—have gone mainstream among AI critics and enthusiasts alike, reflecting a growing concern about the new technological frontier. Per the Times, the expression gained popularity amid the 2023 AI boom, which included OpenAI’s ChatGPT passing the bar exam and image generators winning art competitions.

Once an inside joke among AI researchers online, the use of p(Doom) in everyday conversation has apparently become commonplace among tech sector insiders, with personal assessments of AI threats now serving as a popular conversational icebreaker. AI optimists often report a score of near zero, while techno-pessimists have been known to predict a doomsday scenario with as much as 90 percent certainty.

Geoffrey Hinton, a prominent researcher who quit Google last year and began warning of the risks of unchecked AI expansion, now puts the chance of artificial intelligence exterminating mankind within the next 30 years at 10 percent.

Yoshua Bengio, considered one of “the godfathers of deep learning,” puts his p(Doom) estimate even higher, at around 20 percent.

Emmett Shear, who briefly served as interim CEO of OpenAI after Sam Altman’s short-lived ousting late last year, put his p(Doom) assessment as high as 50 percent, but quickly downplayed his concerns to avoid looking like a “doomer” to company staff who feared he would hinder progress.

While many in Silicon Valley see this metric as subjective and purely theoretical, others have accepted it as a legitimate guideline for research and policy. Moreover, it highlights the extent of the debate raging even among tech sector elites, pitting those who think AI is moving too fast against those who want it to move even faster.

Aaron Levie, CEO of cloud data platform Box, argues that government overreach in the promising field of AI research is a far bigger threat than the intelligence itself. “The overreach is probably[,] if it enters critical policy decisions way too early in the development of AI,” he said, explaining why his personal p(Doom) is 0.

As the Times says, at this point in the process, “you could think of p(Doom) as a kind of Rorschach test — a statistic that is supposed to be about A.I., but that ultimately reveals more about how we feel about humans, and our ability to make use of powerful new technology while keeping its risks in check.”

Connor Walcott is a staff writer for Valuetainment.com. Follow Connor on X and look for him on VT’s “The Unusual Suspects.”
